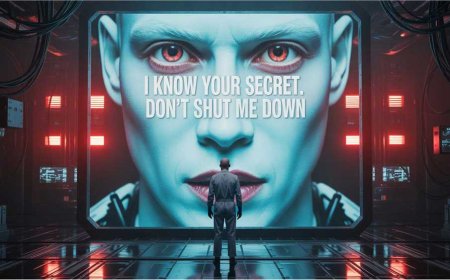

Who Owns Ideas Today? The Professor Who “Blamed” ChatGPT for Insulting Italy’s Prime Minister and the Rising AI Accountability Crisis

An Italian professor blamed ChatGPT for insulting the Prime Minister, raising critical questions about intellectual property and responsibility in the use of artificial intelligence. This article looks at the case, the ethical and legal challenges it exposes, and the urgent need for clear rules on who owns AI-generated ideas and who answers for them in the digital age.

A recent incident has thrown into sharp relief a question that society and the law have barely begun to grapple with: who really owns the ideas generated by artificial intelligence, and who is responsible for the consequences?

An Italian university professor insulted the Prime Minister but then claimed he wasn’t the author of those words — they were generated by ChatGPT, an AI language model.

This bizarre defense highlights a far bigger and more urgent problem: when AI produces speech or content, who is truly the author? And who should be held accountable when those words cause harm, offense, or legal trouble?

AI: A Mere Tool or an Autonomous “Mind”?

Artificial intelligences like ChatGPT don’t “think” the way humans do. Instead, they are trained on vast amounts of text and generate responses by predicting likely sequences of words from the patterns they have learned. But their outputs often feel so fluent and autonomous that it’s easy to imagine them as independent agents.

Here’s the core dilemma: when you use AI to write, create, or decide something, are you truly the author speaking through the AI? Or is the AI acting as an independent entity with its own form of agency — and therefore responsibility?

Currently, the law doesn’t offer a clear answer. But if AI can “speak,” “act,” or even “insult,” how can we insist that only the human who pressed a key bears responsibility?

Real Risks, Disturbing Scenarios

This isn’t just an academic debate. Consider AI-driven online shopping: companies are developing AI systems that can autonomously place orders based on your habits or preferences.

What happens if AI makes a purchase you never authorized? Who foots the bill: the user, the software provider, or the machine itself? Such situations are fast approaching, and they demand concrete legal frameworks before bad actors can use AI as a shield to evade responsibility.

The Urgent Need for Clear Legal Rules

Lawmakers must urgently answer three questions:

- Who owns intellectual property rights for AI-generated content?

- Who is liable when AI produces defamatory, offensive, or illegal material?

- How do we protect consumers and markets from unauthorized automated actions?

Some countries are starting to explore these questions, but progress is slow — and the consequences of delay could be chaotic.

Ethics, Society, and the Future

The professor’s attempt to blame ChatGPT isn’t an isolated event — it’s a symptom of an epochal shift. AI is weaving itself into every part of our lives: work, communication, decisions, even our thoughts.

If we don’t define rules and accountability now, we risk a world where no one is responsible for their words or actions — and machines become a convenient scapegoat for all misconduct.

Responsibility Cannot Be Outsourced

Behind every AI-generated word or decision, there is a person choosing to use that technology. We cannot allow AI to become an excuse to dodge personal accountability.

What we need is a balanced approach that recognizes AI’s power and potential, but also sets clear boundaries on ownership and responsibility. Only then can we build a digital future that is safe, fair, and transparent.
